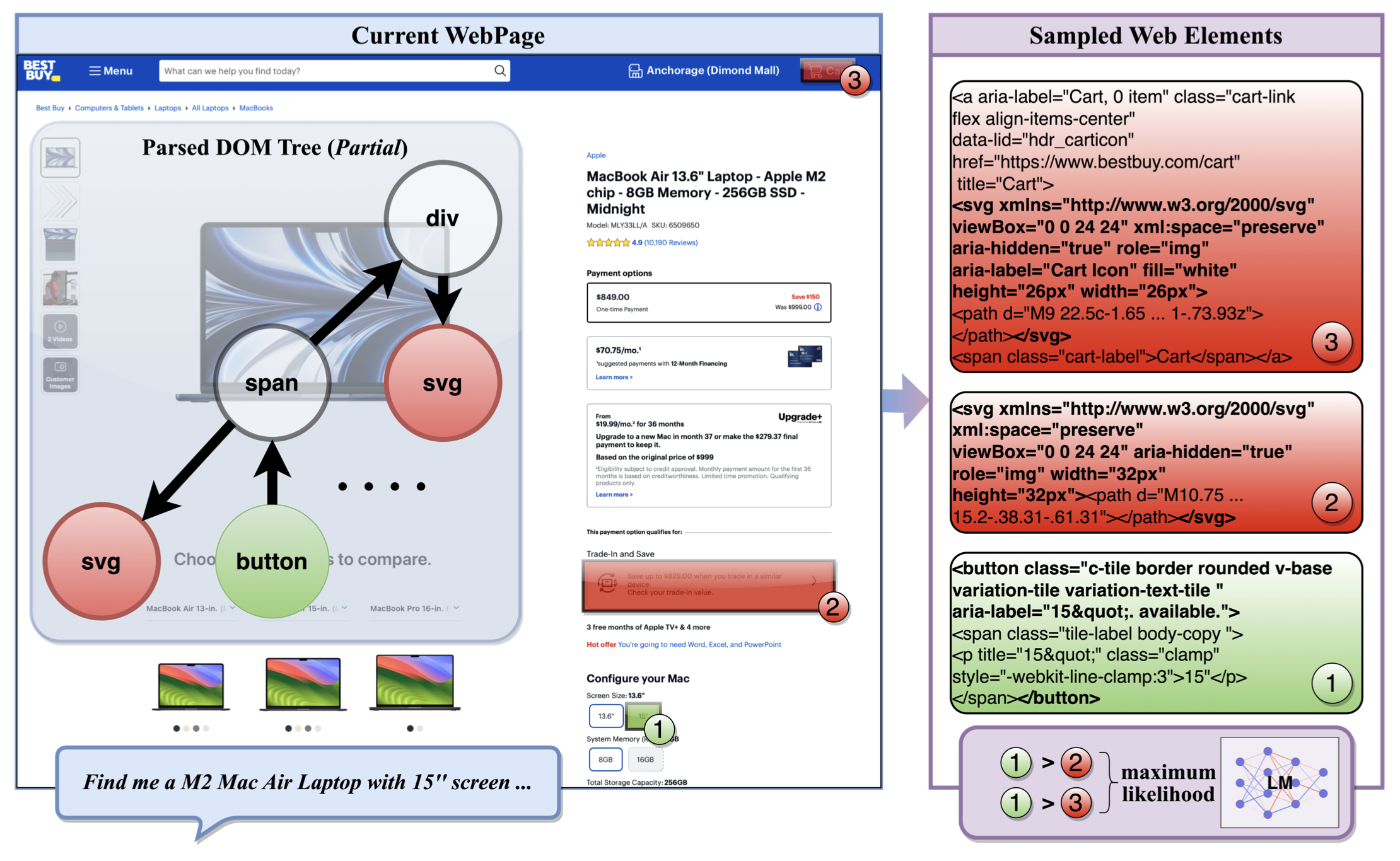

WEPO: Web Element Preference Optimization for LLM-based Web Navigation

Published in the 39th AAAI Conference on Artificial Intelligence (AAAI-25 Oral), 2024

Recommended citation: Jiarun Liu, Jia Hao, Chunhong Zhang and Zheng Hu. 2025. WEPO: Web Element Preference Optimization for LLM-based Web Navigation. In Proceedings of the 39th AAAI Conference on Artificial Intelligence (AAAI-25), Philadelphia, Pennsylvania, USA. https://ojs.aaai.org/index.php/AAAI/article/view/34863